Kubernetes Ingress Troubleshooting: Error Obtaining Endpoints for Service

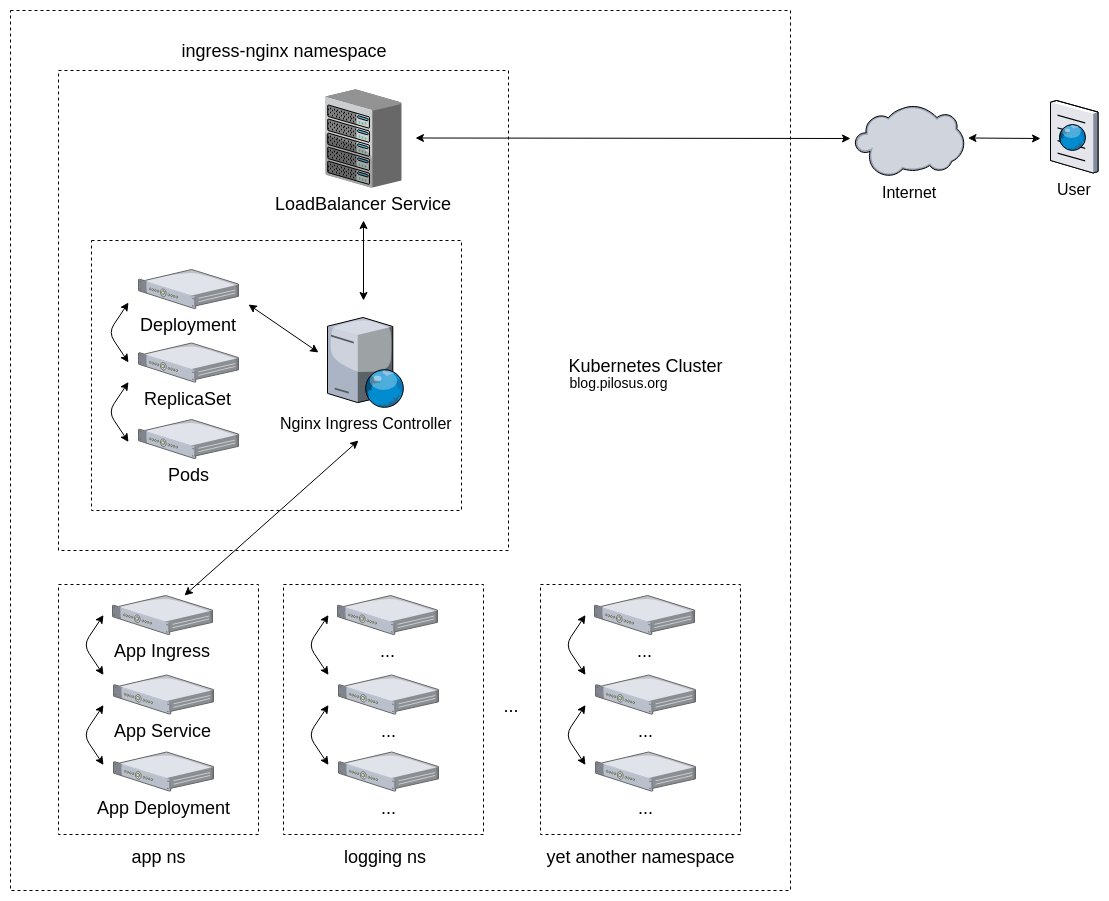

Recently I set up an Nginx Ingress Controller on my DigitalOcean Kubernetes cluster. It’s made up of a replica set of pods that run an Nginx web server and watch for Ingress resource deployments. The controller also fires up a LoadBalancer service that routes and balances external traffic to the Nginx pods. In turn, the Nginx pods route traffic to your app pods in accordance with the rules from your app’s Ingress manifest. All in all, the topology looks like this: external traffic reaches the LoadBalancer service, which passes it to the Nginx pods, which pass it to your app pods.

The problem is that Kubernetes uses so many abstractions (Pods, Deployments, Services, Ingress, Roles, etc.) that it’s easy to make a mistake. The good news is that Kubernetes gives you great tools to troubleshoot the problems you bump into. In my case, the first response I got after setting up the Ingress Controller was Nginx’s 503 error (Service Temporarily Unavailable). Here is how I fixed it.

Kubernetes Nginx Ingress Controller Troubleshooting

Let’s assume we are using the Kubernetes Nginx Ingress Controller, since there are other implementations too. Its components get deployed into their own namespace called ingress-nginx.

The first place to look to find out why a service responds with a 503 status code is the Nginx logs. Let’s list the Nginx pods:

    $ kubectl get pods --namespace ingress-nginx
    NAME                                        READY   STATUS    RESTARTS   AGE
    nginx-ingress-controller-5694ccb578-l82hc   1/1     Running   0          21h

With only a single pod, it’s easy to skim through the logs with kubectl logs. If there were multiple pods, it would be much more convenient to have an ELK (or EFK) stack running in the cluster.
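If you do have several controller pods and no log stack at hand, kubectl can still pull logs from all of them through a label selector. Here is a minimal sketch. It assumes the controller pods carry the label app.kubernetes.io/name=ingress-nginx, which depends on how the controller was installed, so check with kubectl get pods --show-labels first. The function name controller_logs is made up for illustration.

```shell
# Hypothetical helper: print recent log lines from every controller pod.
# $1 = label selector of the controller pods (the default is an assumption).
controller_logs() {
  selector="${1:-app.kubernetes.io/name=ingress-nginx}"
  # -l selects pods by label, --prefix tags each line with its source pod,
  # --tail limits how many lines are fetched per pod.
  kubectl logs --namespace ingress-nginx -l "$selector" --prefix --tail=100
}
```

Call it as controller_logs, or pass your own selector, for example controller_logs app=nginx-ingress if that is how your pods are labeled.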

Looking through the log, we see:

    $ kubectl logs -f nginx-ingress-controller-5694ccb578-l82hc --namespace ingress-nginx
    ...
    Error obtaining Endpoints for Service "<namespace>/<your-app-service>": no object matching key "<namespace>/<your-app-service>" in local store
    ...
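Controller logs get noisy, so it can help to filter them down to just the services Nginx is complaining about. The sketch below assumes the error message has exactly the wording shown above. The function name missing_endpoint_services is my own invention, not part of kubectl.

```shell
# Hypothetical helper: read controller log lines on stdin and print each
# "<namespace>/<service>" key that appears in an
# "Error obtaining Endpoints for Service" message, once per service.
missing_endpoint_services() {
  sed -n 's/.*Error obtaining Endpoints for Service "\([^"]*\)".*/\1/p' | sort -u
}

# Usage (pod name taken from the listing above):
#   kubectl logs nginx-ingress-controller-5694ccb578-l82hc \
#     --namespace ingress-nginx | missing_endpoint_services
```

Note that the usage line drops -f, so the command finishes instead of following the log forever.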

Let’s see what endpoints we have:

    $ kubectl get endpoints --namespace <namespace>
    ...
    <namespace> <your-app-service> <none> 21h
    ...

Indeed, our service has no endpoints. That means the Service deployed to expose your app’s pods has no pod addresses to send traffic to, so Nginx has nothing to proxy to and answers with 503. A Service ends up without endpoints when it was deliberately created without a selector, when the matching pods aren’t ready, or when its label selector doesn’t match any pods. You know what you’re doing if you left the selector out on purpose, so check your label selectors carefully! Most likely it’s a wrong label name or value that doesn’t match your app’s pods. That was the case for me, by the way.
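To check the selector, I find it easiest to print it right next to the labels of the pods it is supposed to match. A minimal sketch, using only standard kubectl flags; the function name show_selector_and_pod_labels and its arguments are made up for illustration.

```shell
# Hypothetical helper: show a Service's selector next to the labels of the
# pods in the same namespace, so a mismatch is easy to spot.
# $1 = namespace, $2 = service name
show_selector_and_pod_labels() {
  echo "Selector of service $2:"
  kubectl get service "$2" --namespace "$1" -o jsonpath='{.spec.selector}'
  echo
  echo "Labels of pods in namespace $1:"
  kubectl get pods --namespace "$1" --show-labels
}
```

Every key/value pair in the selector has to appear among a pod’s labels for that pod to become an endpoint. Once you think they match, kubectl get pods --namespace <namespace> -l <key>=<value> should list your app’s pods.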

Once you have fixed your labels, reapply your app’s service and check the endpoints once again:

    $ kubectl get endpoints --namespace <namespace>
    ...
    <namespace> <your-app-service> 10.244.0.136:8080,10.244.0.144:8080,10.244.0.185:8080 21h
    ...

Now our service has three endpoints, each one a pod IP:port pair! The service itself is of type ClusterIP, the type that best fits a setup where an Ingress Controller behind a Load Balancer routes external traffic to it.

A request to the service now returns 200 OK.
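If you want to check the status code from a terminal rather than a browser, curl can print just that. A small sketch; the function name http_status is made up, and the URL is whatever host your Ingress rule answers on.

```shell
# Hypothetical helper: print only the HTTP status code for a URL.
# $1 = URL to request
http_status() {
  # -s silences progress output, -o /dev/null discards the response body,
  # -w '%{http_code}' prints the status code of the response.
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Usage (replace with the host from your Ingress rule):
#   http_status "http://<your-app-host>/"
```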